Introduction

PyDog is a tool similar to cron with the following additions:

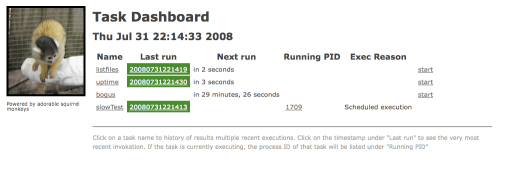

- It includes a web front end with a quick dashboard view.

- Output is logged to files instead of being sent via mail.

- Notification system to tell users when a process has failed.

- A history of past output is saved and can be browsed.

It was written in an environment where we had various systems and infrastructure that I wanted to monitor. In the beginning, we used cron, but this had its headaches. For example:

- If a system went offline, we'd get spammed with notifications until it came back up.

- We had to carefully write our scripts to not emit any output unless there was a problem. However, if we wanted to log informational messages, we had to write them to a separate file. (This required us to come up with some ad hoc conventions that would often fail at the worst time.)

- If a script was being spawned every minute but took 2 minutes to run, processes would accumulate.

Around the same time, I was impressed by the simplicity of CruiseControl, a tool for automating continuous builds. It had hooks for various notification mechanisms, captured output from jobs, and had the concept of success or failure.

However, after further consideration, I realized that CruiseControl's design didn't seem like a good fit. While adapting it would have been possible, my needs were very specific and very simple. So, I attempted to keep it simple and start from scratch, quickly building the project for my exact usage patterns.

Screenshots

This is the main dashboard. From here you can see the status of all processes and a red/green indicator to see if the last run was successful or not. Clicking on a process name takes you to the history for that process where you can download artifacts from previous runs.

Installation

Honestly, this is a bit of a pain, and it's likely due to my own ignorance of how to organize python projects. I'm not entirely sure where to find best practices for installing python packages. In particular, I worry about installing python packages globally. If anyone can point me to a good example or good practices for bundling python apps, please email me at pgm-at-lazbox.org. I'd be interested to learn the right way to do this.

In the meantime, here are some complicated instructions.

Download the following 3rd party packages: CherryPy 3.0.2, PyYAML 3.05, and Cheetah 2.0rc8.

Put CherryPy-3.0.2/cherrypy, PyYAML-3.05/lib/yaml, and Cheetah-2.0rc8/src under src. The src directory should look something like:

    -rw-r--r--   1 pgm  staff   191 Oct 22  2007 config.yaml
    -rw-r--r--   1 pgm  staff  1141 Feb 25 20:41 delayedEventService.py
    -rw-r--r--   1 pgm  staff   701 Feb 25 21:08 eventqueue.py
    drwxr-xr-x   5 pgm  staff   170 Jul 31 21:43 cherrypy
    drwxr-xr-x  11 pgm  staff   374 Nov 15  2007 yaml
    drwxr-xr-x  11 pgm  staff   374 Dec  1  2007 Cheetah
    ...etc...

Now, just run

    python ui.py

to start the webserver. You should see a message like:

    [31/Jul/2008:22:13:01] HTTP Serving HTTP on http://0.0.0.0:9080/

At this point you can point your browser to http://localhost:9080 to see the interface.

Writing a Script for PyDog

The goal was to make writing scripts as easy as possible, so the conventions are extremely simple.

- Any command line can be run.

- This command can write to stderr and/or stdout, and the output will be captured into two separate files.

- Each time the script is run, a new directory will be created and the sub-process will execute within that directory.

The directories that are created can be browsed from the dashboard. Scripts are welcome to drop files into the current working directory. Any files in that directory can be downloaded/viewed from the dashboard UI. This is also where the captured stderr and stdout output are stored.
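The conventions above can be illustrated with a minimal sketch of a runner. This is not PyDog's actual implementation: the function name `run_task`, the directory naming scheme, and the file names `stdout.txt` and `stderr.txt` are all assumptions made for illustration.

```python
import subprocess
import tempfile
import time
from pathlib import Path


def run_task(command, root_job_dir, task_name):
    """Run `command` in a fresh per-run directory and capture its output.

    Hypothetical helper for illustration only; not PyDog's real code.
    `command` is a list of arguments, e.g. ["ls", "-al"].
    """
    # One new directory per execution, grouped by task name.
    task_dir = Path(root_job_dir) / task_name
    task_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    run_dir = Path(tempfile.mkdtemp(prefix=stamp + "-", dir=task_dir))

    # stdout and stderr go to two separate files inside the run directory
    # (the file names are assumptions).
    with open(run_dir / "stdout.txt", "wb") as out, \
            open(run_dir / "stderr.txt", "wb") as err:
        # The sub-process executes with the run directory as its working
        # directory, so any files it drops there sit next to the output.
        result = subprocess.run(command, cwd=run_dir, stdout=out, stderr=err)

    return run_dir, result.returncode
```

Calling `run_task(["ls", "-al"], "tasks", "listfiles")` would return the new run directory and the command's exit code, which a dashboard could then list alongside earlier runs.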

To indicate a failure, the script should exit with a non-zero return code. Fortunately, most scripting languages do this when they encounter a run-time exception anyway, so this will naturally happen if there is a mistake in the script.
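As an example of a script that follows these conventions, here is a small disk space check. The function name `check_disk`, the 10% threshold, and the report file name are hypothetical choices for illustration; nothing here is required by PyDog beyond the exit code.

```python
import shutil
import sys


def check_disk(path="/", min_free_fraction=0.10, report_name="disk_report.txt"):
    """Return 0 if enough space is free under `path`, 1 otherwise."""
    usage = shutil.disk_usage(path)
    free_fraction = usage.free / usage.total

    # Informational output goes to stdout, where it is captured to a file.
    print(f"{path}: {free_fraction:.1%} free")

    # Files written to the current working directory end up in the
    # per-run directory and can be viewed from the dashboard.
    with open(report_name, "w") as report:
        report.write(
            f"total={usage.total}\nused={usage.used}\nfree={usage.free}\n"
        )

    if free_fraction < min_free_fraction:
        # Problems go to stderr; the non-zero result marks the run as failed.
        print(f"low disk space on {path}", file=sys.stderr)
        return 1
    return 0
```

Ending the script with `sys.exit(check_disk())` passes the result back as the process exit code. An uncaught exception would also produce a non-zero exit code, so no extra error handling is needed for the run to be reported as failed.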

Best Practices

More to be written here

Configuration Reference

All settings are controlled by a pydog.yaml file which is read at startup.

To illustrate configuration, we'll walk through a few examples.

Global options

There are a few global options that are not associated with any task:

    rootJobDir: 'tasks'
    stateFile: 'lastState.ser'
    port: 9080

The meanings of these parameters are:

- rootJobDir: The directory in which to store the history. This is also where the subdirectories created for each executed job are put.

- stateFile: Path to the file where pydog saves its state. This state file is used to keep track of which executions succeeded or failed, along with other information needed in case pydog is restarted. Deleting this file will reset the state, and it will be created anew on the next execution.

- port: The port the webserver should listen on.

The "tasks" section

All processes are defined under the tasks section. The example below shows two tasks named "listfiles" and "slowTest".

    tasks:
      - command: ls -al
        frequency: 15
        name: listfiles
        notifier: {type: console}
      - command: bash slowScript.sh
        crontime: '* * 2 0 *'
        name: slowTest
        notifier:
          type: email
          server: smtp.sample.com
          fromAddress: pydog-no-reply@sample.com
          toAddresses: [pgm@sample.com]
          url: http://node016:9280
          nospam: 1

The first command is set to run periodically by specifying a "frequency" parameter. In this case, the command is executed every 15 seconds.

If you don't care exactly when the job is run, it's best to use frequency. This also has the advantage that the timer for the next run is reset after each execution. So, if the length of time for the execution is unknown, this is a good option to use.
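To see why resetting the timer helps, here is a small simulation. It assumes that "reset after each execution" means the interval is counted from the moment a run finishes; that is my reading of the behavior, and the function names are hypothetical.

```python
def next_start_after(finish_time, frequency):
    """Next start time when the timer is reset after each execution."""
    return finish_time + frequency


def simulate(frequency, run_duration, runs):
    """Return (start, finish) times, in seconds, for consecutive runs."""
    schedule = []
    start = 0
    for _ in range(runs):
        finish = start + run_duration
        schedule.append((start, finish))
        # The next run is scheduled relative to when this one finished,
        # so two runs of the same task never overlap.
        start = next_start_after(finish, frequency)
    return schedule


# A task with frequency 60 that takes 120 seconds to run:
print(simulate(60, 120, 3))  # [(0, 120), (180, 300), (360, 480)]
```

Under this scheme a slow task simply runs less often, instead of piling up processes the way a job spawned every minute by cron would.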

The second command is configured to run at a set time each day. The syntax is the same format as cron. In this case, it says to run this command every day at 2am. The second task is also configured to send an email reporting whether the job succeeded or failed. The nospam option further specifies that an email should only be sent on a change of status. (That is to say, either a job which was previously successful just failed, or something that previously failed just executed successfully.)
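The nospam rule can be sketched as a small decision function. This is an illustration rather than PyDog's code: the name `should_notify` is hypothetical, and the handling of a task's very first run (notify only if it failed) is an assumption the text above does not cover.

```python
def should_notify(previous_ok, current_ok, nospam):
    """Decide whether to send a notification for a finished run.

    previous_ok is None when there is no earlier run on record.
    """
    if not nospam:
        # Without nospam, every run is reported.
        return True
    if previous_ok is None:
        # Assumption: with no history, only a failure is worth reporting.
        return not current_ok
    # With nospam, report only when the status changed.
    return previous_ok != current_ok


# A system goes offline for three runs and then recovers.
outcomes = [True, False, False, False, True]
previous = None
sent = []
for ok in outcomes:
    sent.append(should_notify(previous, ok, nospam=True))
    previous = ok
print(sent)  # [False, True, False, False, True]
```

In this sequence only two emails go out: one when the job first fails and one when it recovers, which avoids the flood of messages described in the introduction.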